Razer Bets $600M on Gamers

Plus Musk's China warning and A16z's predictions

Reading time: 5 minutes

🗞️In this edition

Gaming Company Razer Bets $600 Million That Gamers Will Adopt AI First

Sponsored: Your Product Grows. Your Support Team Doesn't Have To

Musk Says China Will Dominate AI Because of Electricity, Not Chips

Top VC Firm Says Video and Image AI Will Beat Text in 2026

In other AI news –

Infosys Deploys AI Software Engineer Devin Across Global Clients

Nvidia Unveils Robot Models While Lego Adds Chips to Bricks

Microsoft Buys Data Startup to Automate Work Engineers Hate

4 must-try AI tools

The constraints keep changing.

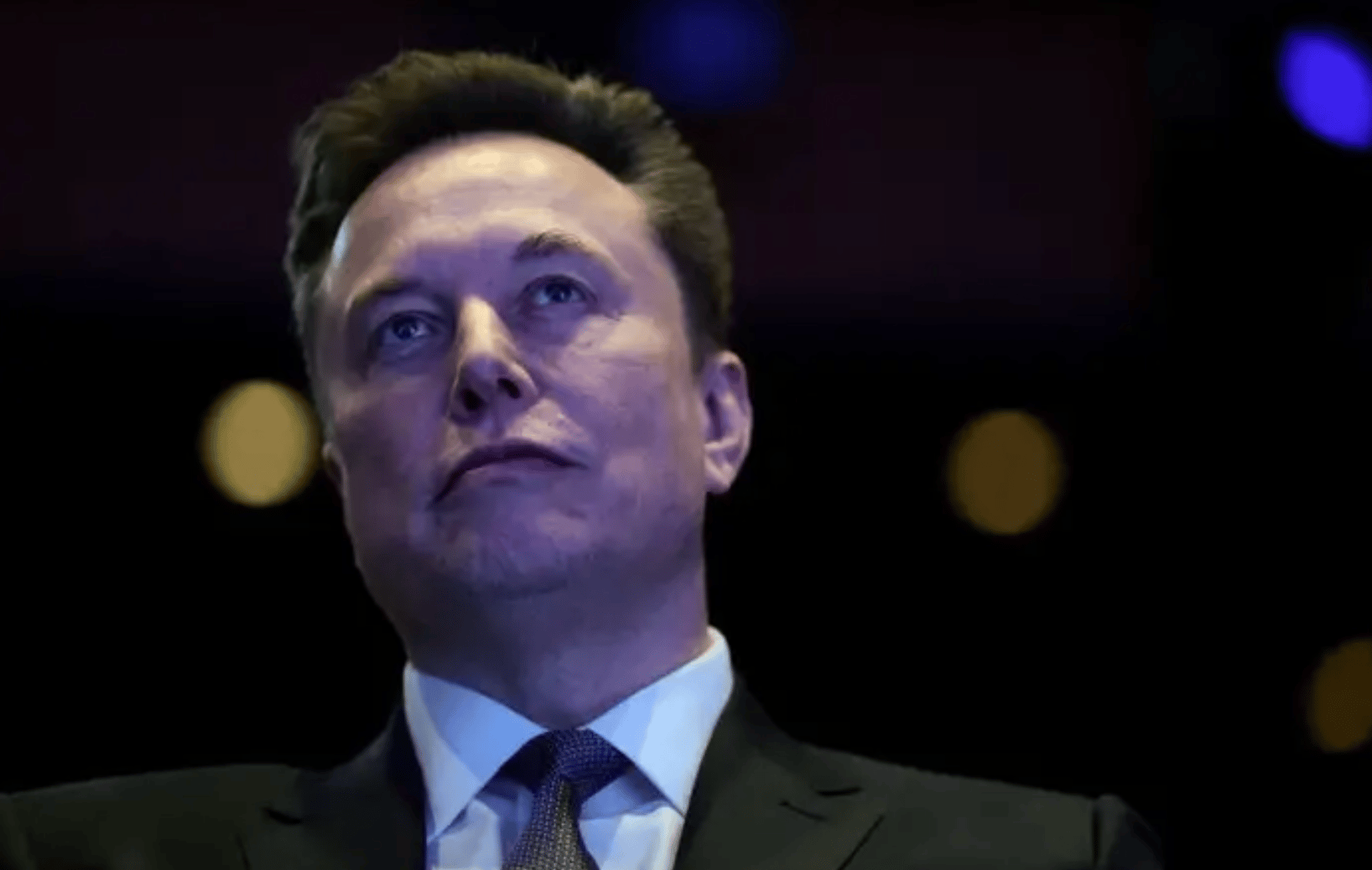

First AI needed algorithms. Then data. Then chips. Now Elon Musk says electricity determines who wins.

This week shows the assumptions shifting. Musk warns China's electricity advantage beats chip restrictions. Andreessen Horowitz predicts video beats text. Razer bets $600 million that gamers adopt AI first.

These aren't just predictions. They're different ways of thinking about what actually matters.

If you want to understand where this is heading, start here.

Gaming Company Razer Bets $600 Million That Gamers Will Adopt AI First

What's happening:

Razer, the gaming peripherals company, unveiled several AI products at CES 2026. Project Motoko is a pair of AI headphones with built-in cameras that works with OpenAI, Google Gemini, and xAI's Grok.

Project AVA is a desk-based holographic avatar that gives physical form to AI assistants. It launches in the second half of 2026.

Razer also launched an AI tool for game developers called Razer QA Companion. The company says it helps catch 25 percent more bugs and reduces QA time by 50 percent. About 50 studios have already adopted it.

Razer went private in 2022, which CEO Min-Liang Tan says allows the company to focus on long-term AI investments without quarterly earnings pressure.

The company believes gamers will adopt AI first, and that the rest of the world will follow. Tan points to how gaming led innovation in social networks and chips.

The $600 million goes toward R&D, hiring scientists, and building internal tools. Razer is establishing AI centers in Singapore, Europe, and the United States.

Why this is important:

This matters because Razer is betting that gamers are the early adopters who will prove AI use cases for everyone else.

The gaming industry is projected to reach nearly $400 billion by 2033. AI gaming specifically could hit $28 billion, growing at 28 percent annually. That's a market worth chasing.

Razer's 150 million existing software users give it a distribution advantage. The company doesn't need to find new customers. It can sell AI features to people already using its platform.

The headphone approach is clever. Most people own headphones, but not everyone wears glasses. Prescription lens wearers are less likely to invest in expensive smart glasses. Headphones avoid that problem.

Project Motoko's 36 hours of battery life, versus 6 hours for Meta's Ray-Ban glasses, shows that hardware matters. If the AI works but the battery dies quickly, adoption fails.

Going private removes quarterly pressure. Public companies face investor scrutiny every three months. Private companies can spend $600 million without explaining immediate returns.

Adoption of the developer tool by about 50 studios already shows commercial traction. These aren't just consumer experiments. Businesses are paying for Razer's AI.

The belief that gaming leads broader tech adoption has historical support. Chips, social features, and streaming all started in gaming before going mainstream.

Sponsored: Your Product Grows. Your Support Team Doesn't Have To

Growing fast means drowning in support tickets.

CoSupport AI answers 90% of customer questions for you. Across chat, email, and social. In 40+ languages. With answers your customers actually trust.

Deploy in 10 minutes. No new hires. No training. Just immediate relief.

The result?

Save $4,600+ monthly per 1,000 tickets.

Get your evenings back.

Let your team focus on the questions that actually need a human.

Starting at $99/month. Unlimited replies. Unlimited users.

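Musk Says China Will Dominate AI Because of Electricity, Not Chips

What's happening:

Elon Musk predicted that China will dominate AI, speaking on the Moonshots with Peter Diamandis podcast published this week.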

The reason isn't chips. It's electricity.

Musk estimates that China could reach about three times the electricity output of the US by 2026. That would give it the capacity to support energy-hungry AI data centers.

He says people are underestimating how hard it is to bring electricity online. That's now the limiting factor for scaling AI systems, not semiconductors.

While the US has focused on restricting China's access to advanced chips, Musk suggests those constraints may matter less over time if China has vastly more power generation.

His own company xAI is building massive data centers in Memphis that will consume nearly 2 gigawatts of power. That's enough electricity for roughly 1.5 million US homes.
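The homes comparison is easy to sanity-check. The sketch below is a rough back-of-the-envelope estimate, not Musk's or xAI's own math. It assumes an average US household uses about 10,500 kWh of electricity per year (our assumption, not a figure from the podcast) and that the data center draws its full power around the clock.

```python
# Rough check: how many average US homes use as much electricity as a
# data center drawing a given amount of power continuously?

HOURS_PER_YEAR = 8760
# Assumption (not from the source): approximate average annual
# electricity use of a US household, in kWh.
ANNUAL_KWH_PER_HOME = 10_500


def homes_equivalent(gigawatts: float,
                     annual_kwh_per_home: float = ANNUAL_KWH_PER_HOME) -> float:
    """Return the number of average homes matching a continuous draw in GW."""
    average_kw_per_home = annual_kwh_per_home / HOURS_PER_YEAR
    kilowatts = gigawatts * 1_000_000  # 1 GW = 1,000,000 kW
    return kilowatts / average_kw_per_home


if __name__ == "__main__":
    for gw in (1.8, 2.0):
        millions = homes_equivalent(gw) / 1e6
        print(f"{gw} GW is roughly {millions:.1f} million homes")
```

Under those assumptions, a draw of a little under 2 gigawatts lands in the same range as the 1.5 million homes figure. A different household average would shift the result.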

The scaling constraint for AI has moved from chips to voltage transformers to electricity generation, according to Musk.

China's President Xi Jinping mentioned AI progress prominently in his New Year address, citing breakthroughs in domestic chip development and AI models.

Why this is important:

This matters because it reframes the AI competition around infrastructure, not just technology.

The US strategy has centered on denying China access to advanced semiconductors. Export controls block Nvidia's best chips from reaching Chinese companies.

But if Musk is right, that strategy misses the bigger bottleneck. You can have all the chips you want. Without electricity to power them, they're useless.

China is building power generation capacity at a pace the US isn't matching. If that gap widens, China gains an infrastructure advantage that's harder to counter than chip restrictions.

Electricity takes years to build. You can't just flip a switch and add gigawatts to the grid. Power plants, transmission lines, and transformers all require massive upfront investment and long construction timelines.

This also changes the economics of AI development. Companies will locate data centers where power is abundant and cheap. If China has three times the electricity capacity, it can in principle support three times the AI infrastructure.

The environmental implications are significant. More power generation means more emissions unless it comes from renewable sources. China is building both renewable and fossil fuel capacity.

For the US, this suggests a strategic vulnerability. AI leadership requires not just research talent or chip manufacturing, but fundamental energy infrastructure that takes decades to scale.

Comments from the editor:

What stands out is how the competitive landscape keeps shifting.

First it was talent. Then it was data. Then chips became the chokepoint. Now Musk is saying electricity is the real constraint.

He's probably right. You can design better algorithms and manufacture advanced chips, but without power to run them, it doesn't matter.

The three-times estimate for China's electricity capacity by 2026 is aggressive. That's less than a year away. Whether it proves precisely accurate or only directionally correct, the trend is clear.

The irony is that chip export controls may have accelerated China's focus on power infrastructure. If you can't get the best chips, you compensate by building more capacity with the chips you have.

Musk has skin in this game. His xAI facilities consume enormous amounts of power. He's dealing with these constraints directly, which gives his perspective credibility.

The Memphis data center drawing power equivalent to 1.5 million homes is staggering. That's more than many cities consume. And it's just one facility.

The shift from chips to electricity also changes who matters in the AI race. It's not just tech companies anymore. It's utilities, energy companies, and governments that control grid infrastructure.

Whether the US can respond quickly enough is an open question. Building power generation takes years. By the time new capacity comes online, the gap may have widened further.

The real test is whether this prediction changes policy. If electricity is the constraint, chip export controls alone won't maintain US AI leadership.

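Top VC Firm Says Video and Image AI Will Beat Text in 2026

What's happening:

Partners at Andreessen Horowitz (a16z) shared their predictions for consumer AI in 2026. Their main prediction is that image and video capabilities will become more important than text for winning users.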

Justine Moore, an investment partner, said there's "nearly infinite demand" for image and video AI among both professionals and everyday users. Viral trends are created around new image and video model capabilities.

ChatGPT currently dominates with 800 to 900 million weekly active users. Gemini has reached about 35 percent of that scale on web and 40 percent on mobile. Other models like Claude and Perplexity are at 8 to 10 percent.

But Gemini is growing desktop users faster than ChatGPT. If Gemini maintains momentum with its video and image features, it could overcome ChatGPT's name recognition advantage.

The partners predict multimodality will become standard. That's the ability to process text, images, and audio all at once, not separately.

They also highlighted ChatGPT Enterprise growth. Weekly messages increased eightfold in 2025. If people have to use ChatGPT for work, that could translate into consumer usage.

Another partner, Olivia Moore, said ChatGPT's app store success will be its "defining question" for 2026. The SDK that lets developers build apps for ChatGPT could be crucial for growth.

Why this is important:

Text chatbots got all the early attention. But images and videos are what people share and what goes viral. That drives user acquisition faster than text features.

The gap between ChatGPT and competitors is large but not insurmountable. Going from 35 percent to 50 percent of ChatGPT's scale is achievable in a year if growth rates hold.
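To see what closing that gap would take, here is a minimal sketch of the arithmetic. The function name and the leader growth scenarios are ours, for illustration only. It simply asks how fast a challenger must grow to move from 35 percent to 50 percent of the leader's user base while the leader keeps growing too.

```python
def required_growth(current_share: float,
                    target_share: float,
                    leader_growth: float = 0.0) -> float:
    """Growth rate a challenger needs over a period to move from
    current_share to target_share of the leader's user base, while the
    leader itself grows by leader_growth over the same period.

    Shares and growth rates are fractions (0.35 means 35 percent).
    """
    return (target_share / current_share) * (1 + leader_growth) - 1


if __name__ == "__main__":
    # Hypothetical scenarios for the leader's growth over the year.
    for leader_growth in (0.0, 0.2, 0.5):
        needed = required_growth(0.35, 0.50, leader_growth)
        print(f"Leader grows {leader_growth:.0%}: "
              f"challenger must grow about {needed:.0%}")
```

If the leader stands still, the challenger needs to grow by about 43 percent. Every point of growth for the leader raises that bar, which is why the relative growth rates matter more than the current gap.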

Multimodality changes how people use AI. Instead of switching between tools for different tasks, one tool handles everything. That increases stickiness and makes switching harder.

The enterprise angle is strategic. When companies force employees to use a tool for work, consumer adoption follows. People use what they're already familiar with.

ChatGPT's app ecosystem could be its moat. If developers build successful apps on the ChatGPT platform, those apps keep users locked in. That's the iOS playbook applied to AI.

The speed of change is notable. A16z partners said things are "changing very quickly" in the consumer AI race. Market position today doesn't guarantee market position tomorrow.

The fact that specialized models like Claude cater to technical users shows there's room for multiple winners. Not everyone needs the same features.

In other AI news

Infosys Deploys AI Software Engineer Devin Across Global Clients – Infosys is rolling out Cognition's Devin AI coding agent to its entire engineering ecosystem and client base, six months after testing showed improvements in quality and efficiency.

Nvidia Unveils Robot Models While Lego Adds Chips to Bricks – CES 2026 brought robot foundation models from Nvidia, smart bricks from Lego, and new autonomous vehicle partnerships, showing AI moving from screens into physical products.

Microsoft Buys Data Startup to Automate Work Engineers Hate – Microsoft acquired Osmos to bring AI agents that automatically clean and prepare data into Fabric, tackling the tedious work that consumes most of data teams' time.

4 must-try AI tools

ThreadBois - An online tool that helps users create viral thread headers

Teach-O-Matic - A tool that helps users create how-to videos from text instructions

Watermelon - An all-in-one automated customer service tool powered by GPT-4

Audioatlas - Allows users to find the most suitable music from a vast global database of over 200M songs

AI stopped being predictable.

The constraints shift. The leaders change. The assumptions get challenged.

What's clear is that technology alone doesn't win. Infrastructure matters. Distribution matters. Understanding who adopts first matters.

That's what we're tracking. Not just breakthroughs, but the strategic thinking behind them.

If you have any feedback for us, please reply and let us know how we did. We're always looking to improve.


Interested in featuring your services with us? Email us at [email protected]