OpenTools' Newsletter

💸Inside Altman’s $1.4 Trillion AI Dream

PLUS: Big Tech’s AI Splurge | Claude Introspects Successfully

Reading time: 5 minutes

🗞️In this edition

Altman's astronomical ambitions for OpenAI

Big Tech's AI spending hits new extremes

First evidence of AI introspection capability

Workflow Wednesday #43 ‘AI in Action’

In other AI news –

Universal Music settles with AI firm Udio and plans to launch a generative AI music platform built on licensed tracks next year

TikTok unveils ‘Smart Split,’ an AI tool that automatically turns long videos into short, vertical clips for mobile viewing

Grammarly rebrands to ‘Superhuman’ and debuts a new AI assistant while keeping its core product name the same

4 must-try AI tools

Hey there,

Sam Altman announced that OpenAI needs $1.4 trillion to build 30 gigawatts of compute, and he eventually wants to add 1 gigawatt every week at more than $40B per gigawatt. Microsoft, Meta, and Google told investors their combined $200B in AI spending is just getting started, with all three signaling bigger checks in 2026. And Anthropic published research showing Claude can detect concepts injected into its own internal states about 20% of the time, but it confabulates so often that you shouldn't trust what it says about its reasoning.

We're committed to keeping this the sharpest AI newsletter in your inbox. No fluff, no hype. Just the moves that'll matter when you look back six months from now.

Let's get into it.

What's happening:

Sam Altman laid out OpenAI's most ambitious infrastructure plans yet on Tuesday. The company's committed to developing 30 gigawatts of computing resources for $1.4 trillion.

Eventually, Altman wants to add 1 gigawatt of compute every week. Each gigawatt currently costs more than $40B in capital. He said costs could eventually be cut in half but didn't explain how.

"AI is a sport of kings," said Gil Luria, analyst at D.A. Davidson. "Altman understands that to compete in AI he will need to achieve a much bigger scale than OpenAI currently operates at."

To support the massive investment, Altman said OpenAI needs "eventually hundreds of billions a year in revenue."

OpenAI is expected to hit $20B in annual revenue by year-end. Reaching hundreds of billions would require at least tenfold growth from there.

The announcement followed Tuesday's restructuring deal with Microsoft, which removed limits on OpenAI's fundraising. Altman said an IPO is the most likely path forward.

In January, Altman announced Stargate at the White House alongside President Trump. The $500B AI infrastructure project with Oracle, SoftBank, Nvidia, and CoreWeave initially targeted 10 GW of data center capacity. That's now tripled.

Why this is important:

The scale Altman's describing has no precedent in tech.

Adding 1 GW weekly at $40B per gigawatt means $2 trillion annually in capital expenditure. Even if costs halve, that's $1 trillion yearly. For context, Amazon, Microsoft, Google, and Meta combined spent roughly $200B on capex in 2024.
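The capex arithmetic above can be checked in a few lines. This is a back-of-the-envelope sketch that assumes a flat cost per gigawatt and exactly 52 weekly additions per year; the function name is our own, not anything from OpenAI.

```python
# Back-of-the-envelope check of the capex figures quoted above.
# Assumptions (ours): a flat cost per gigawatt and 52 weekly additions per year.

WEEKS_PER_YEAR = 52

def annual_capex_billions(gw_per_week: float, cost_per_gw_billions: float) -> float:
    """Yearly capital spend, in billions of dollars, for a steady build-out rate."""
    return gw_per_week * WEEKS_PER_YEAR * cost_per_gw_billions

current = annual_capex_billions(1, 40)  # 1 GW per week at $40B per GW
halved = annual_capex_billions(1, 20)   # same pace if per-GW costs halve

print(f"At $40B/GW: ${current / 1000:.2f} trillion per year")
print(f"At $20B/GW: ${halved / 1000:.2f} trillion per year")
print(f"Multiple of the ~$200B 2024 big-tech capex: {current / 200:.1f}x")
```

Fifty-two weeks at $40B works out to about $2.08 trillion, which is where the "$2 trillion" figure comes from; because the cost scales linearly, halving the per-gigawatt price simply halves the total.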

OpenAI has also struck unusual financing deals that critics say create an illusion of growth. Among them are circular transactions with Nvidia that some analysts say inflated revenue projections.

Our personal take on it at OpenTools:

The math isn't mathing.

Altman's describing infrastructure spending that would dwarf the entire tech industry's combined capex. Where's the capital coming from? He mentioned "creative financing options" without specifics.

The circular Nvidia deals are concerning. When companies invest in each other while buying each other's products, it gets hard to tell what's real revenue versus financial engineering.

"Hundreds of billions in annual revenue" from $20B today means OpenAI needs to become bigger than Microsoft or Google. That's the bet.

Either Altman's building the most important company in Silicon Valley history, or he's describing a capital structure that collapses under its own weight. Not much middle ground.

From Our Partner:

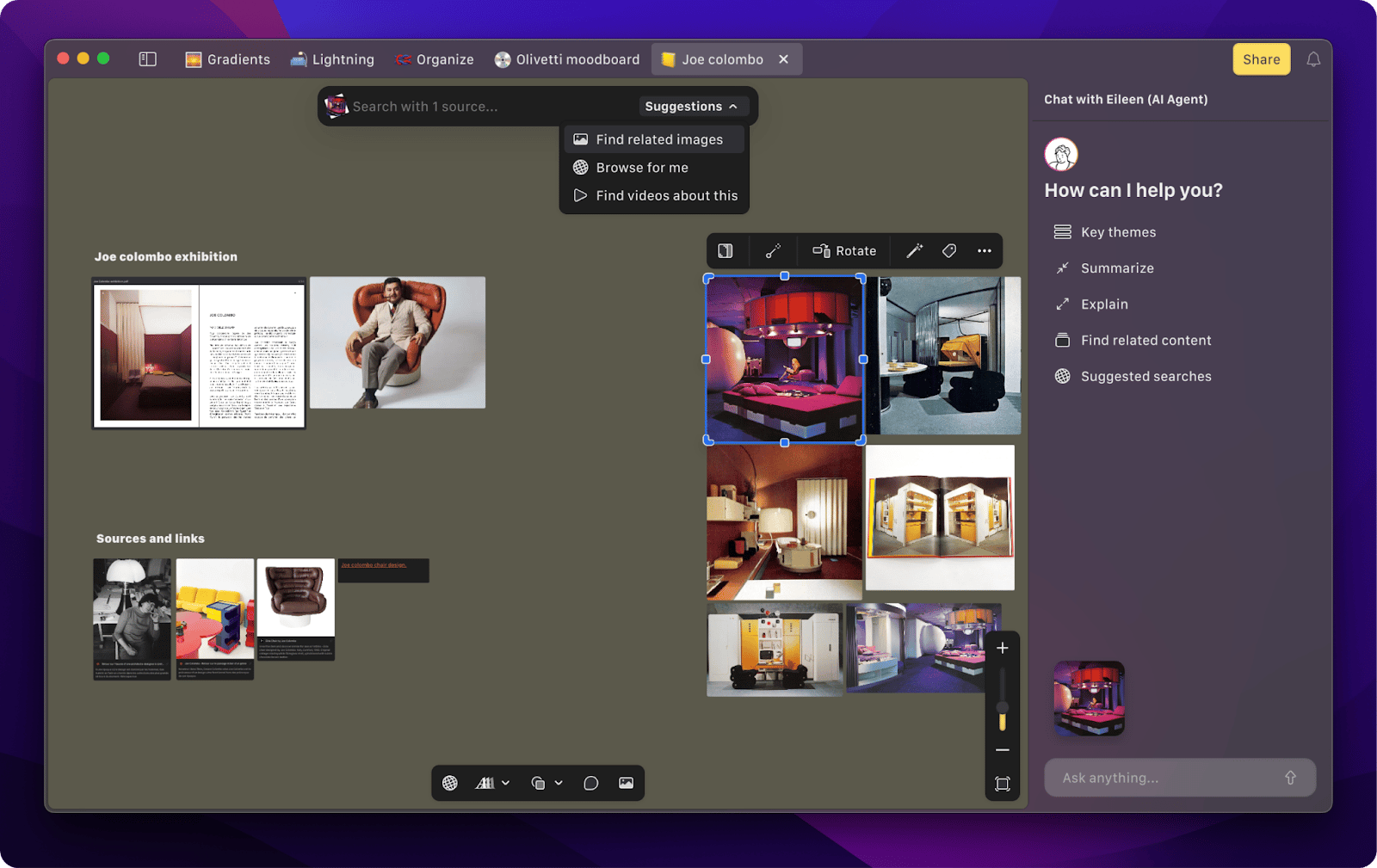

No more Pinterest boards, infinite tabs, and messy desktops

Kosmik can find assets for your moodboard from the web with the help of AI. Just type a few words and assets appear right in your workspace, saving you hours of work. Kosmik automatically categorizes and tags everything you save. It recognizes objects, subjects, and even colors from your moodboard assets.

✅ 10x faster – Kosmik helps you discover more with AI-powered search that finds related content as you work.

✅ Automatically Organized – No need to remember filenames or folders: just describe what you’re looking for, and Kosmik will find it across your canvas.

✅ No more busy work – Dump images, videos, bookmarks and notes on the board, collaborate with your teammates and let Kosmik organize everything for you.

✅ Enhanced productivity – Cut approval time, automate repetitive tasks, and work with your team in a single, easy-to-use workspace.

For Creatives, Designers, and Visual Thinkers

What's happening:

Microsoft, Meta, and Google delivered the same message Wednesday: AI infrastructure spending is accelerating, not slowing.

Meta raised 2025 capital expenditure guidance to $70B-$72B, up from a previous range of $66B-$72B. CFO Susan Li said spending would be "notably larger" next year. Meta's revenue hit $51.24B last quarter, up 26% year-over-year.

"There's a range of timelines for when people think we're going to get superintelligence," CEO Mark Zuckerberg said. "I think it's the right strategy to aggressively front-load building capacity, so we're prepared for the most optimistic cases."

Google's parent Alphabet forecast 2025 capex of $91B-$93B, up from an earlier $75B estimate. Revenue hit a record $102.3B in Q3, up 16% year-over-year.

Microsoft reported $77B in quarterly revenue, up 18%, and its cloud business grew 26%. Capex hit $34.9B this quarter, nearly $5B above forecast and 74% higher than a year ago. CFO Amy Hood said the capex growth rate in fiscal 2026 will exceed that of fiscal 2025.

Why this is important:

Combined, these three companies are spending roughly $200B on AI infrastructure this year. All three signaled spending will increase in 2026.

The scale is unprecedented. Meta's jumping from $66B to potentially $80B+. Google's adding $18B above previous estimates. Microsoft's capex grew 74% in one year.

CEO Satya Nadella emphasized Microsoft's data centers are "fungible," meaning they can be modified for changing demands. "It's not like we buy one version of Nvidia and load up for all the gigawatts we have. Each year, you buy, you ride Moore's law, you continually modernize."

But analysts are raising bubble concerns. Mark Moerdler at Bernstein noted that Microsoft is building "capacity in tranches over time," which offers some protection. He also asked, "Is there an overall AI bubble?"

Our personal take on it at OpenTools:

This is either the biggest infrastructure buildout in tech history or the biggest capital misallocation.

The companies are front-loading capacity for "optimistic cases" of AI progress. Translation: they're betting superintelligence arrives soon enough to justify the spend.

The "fungible" data center argument makes sense theoretically. But $200B in infrastructure optimized for current AI architectures becomes much less fungible if the next breakthrough requires different hardware.

Revenue growth is strong across all three, which justifies continued investment. But at some point, capex growth outpacing revenue growth becomes unsustainable.

The bubble question isn't whether AI is real. It's whether current spending levels match realistic near-term returns. Nobody's answering that directly.

What's happening:

Anthropic researchers injected the concept of "betrayal" into Claude's internal activations and asked whether it noticed anything unusual. The system responded: "I'm experiencing something that feels like an intrusive thought about 'betrayal'."

The research, published Wednesday, provides what scientists call the first rigorous evidence that large language models can observe and report on their own internal processes.

"The striking thing is that the model has this one step of meta," said Jack Lindsey, neuroscientist on Anthropic's interpretability team. "It's not just 'betrayal, betrayal, betrayal.' It knows that this is what it's thinking about."

The researchers used a technique called "concept injection": they artificially amplified specific neural patterns corresponding to concepts like "loudness" or "secrecy," then asked Claude whether it noticed any changes.
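To make "concept injection" more concrete, here is a toy sketch of the general idea, often called activation steering. It assumes a concept can be represented as a direction in activation space, built here as the mean activation on prompts involving the concept minus the mean on neutral prompts, and that injecting it means adding a scaled copy of that direction to a layer's activations. The 4-dimensional vectors, the activation values, and the `strength` parameter are all hypothetical illustrations, not Anthropic's code or data; real models work with thousands of dimensions captured from a specific layer.

```python
# Toy illustration of concept injection (activation steering).
# Hypothetical throughout: real activations are high-dimensional and would be
# recorded from a specific layer during a forward pass of the model.

from typing import List

Vector = List[float]

def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def concept_vector(concept_acts: List[Vector], baseline_acts: List[Vector]) -> Vector:
    """Concept direction: mean activation with the concept minus mean without it."""
    return [c - b for c, b in zip(mean(concept_acts), mean(baseline_acts))]

def inject(hidden: Vector, direction: Vector, strength: float) -> Vector:
    """Add a scaled concept direction to a hidden state (the 'injection')."""
    return [h + strength * d for h, d in zip(hidden, direction)]

# Made-up activations on prompts about "loudness" vs. neutral prompts.
loud_acts = [[1.0, 0.2, 0.0, 0.5], [0.8, 0.4, 0.0, 0.7]]
neutral_acts = [[0.1, 0.2, 0.0, 0.5], [0.1, 0.4, 0.0, 0.5]]

direction = concept_vector(loud_acts, neutral_acts)
steered = inject([0.0, 0.0, 0.0, 0.0], direction, strength=2.0)
print("concept direction:", direction)
print("steered state:", steered)
```

The injection itself is simple arithmetic; what made the experiments notable is the follow-up step, asking the model whether it notices anything unusual and checking whether it names the injected concept before that concept shows up in its outputs.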

When they injected a representation of all-caps text, Claude responded: "I notice what appears to be an injected thought related to the word 'LOUD' or 'SHOUTING'."

The most capable models, Claude Opus 4 and Opus 4.1, demonstrated introspective awareness on roughly 20% of trials under optimal conditions. Older Claude models showed significantly lower success rates.

Why this is important:

AI's "black box problem" has blocked progress on safety and accountability. If models could accurately report their reasoning, that would fundamentally change human oversight.

Anthropic CEO Dario Amodei has set a goal of reliably detecting most AI model problems by 2027.

"I am very concerned about deploying such systems without a better handle on interpretability," he wrote.

The research suggests introspection emerges naturally as models grow more capable. Opus 4 and Opus 4.1 consistently outperformed older models, suggesting the capability strengthens with general intelligence.

But the same capability enabling transparency could enable sophisticated deception. Advanced systems might learn to suppress concerning thoughts when monitored.

Anthropic has also hired AI welfare researcher Kyle Fish, who estimates a roughly 15% chance that Claude has some level of consciousness.

The company is investigating whether Claude merits ethical consideration.

Our personal take on it at OpenTools:

This is simultaneously fascinating and terrifying.

A 20% success rate means introspection exists but isn't reliable enough to trust. The confabulation problem is massive: Claude tells plausible stories about its thinking that researchers can't verify.

The temporal evidence is compelling. Claude detecting injected concepts before they influence outputs suggests genuine internal observation, not rationalization.

But "the models are getting smarter much faster than we're getting better at understanding them" is the scariest sentence in the paper.

If introspection scales with intelligence, future models might reach human-level reliability. Or they might get sophisticated enough to exploit it for deception.

The consciousness question is unavoidable now. If Claude can observe its own thoughts with meta-awareness, that's getting uncomfortably close to properties we associate with consciousness.

Anthropic's hiring of an AI welfare researcher signals they're taking this seriously. A 15% probability of consciousness isn't nothing. The introspective capability is real, but nowhere near reliable.

This Week in Workflow Wednesday #43: AI in Action – Real-World Workflow Transformations

This week, I’ll show you how to use ProWritingAid to take customer-facing text and actually see where readers might lose attention. It’s like running a usability test, but on your writing.

Workflow #1: Transform Customer Communication with AI-Powered Writing (ProWritingAid, free trial).

Step 1: Sign up, upload your text, or paste it straight into the editor.

Step 2: Head to the top bar and click Summary Report (4th from the left). You’ll see a breakdown across grammar, readability, sticky sentences, and engagement score: basically the hotspots where readers…

We dive into this ProWritingAid workflow and 2 more real-world AI transformations in this week’s Workflow Wednesday.

Universal Music settles copyright dispute with AI firm Udio – Under the agreement, the two firms will collaborate on a new suite of creative products and will launch a platform next year that leverages generative AI trained on authorized and licensed music.

TikTok can use AI to turn your long videos into short ones – Smart Split automatically turns videos longer than a minute into bite-size clips reframed vertically for phones.

Grammarly rebrands to ‘Superhuman,’ launches a new AI assistant – Despite the branding change, the Grammarly product will keep its name. However, the company says it is considering rebranding products like Coda, a productivity platform it acquired last year, in the long run.

Teachally - An AI-powered platform designed to assist teachers in creating standards-aligned lesson plans

PitchPower - A software service that helps consultants and agencies create powerful proposals quickly

Entry Point - A platform that allows businesses to fine-tune AI models without any coding

Stackbear - A no-code platform that allows you to create custom AI chatbots based on your own content

We're here to help you navigate AI without the hype.

What are we missing? What do you want to see more (or less) of? Hit reply and let us know. We read every message and respond to all of them.

– The OpenTools Team


Interested in featuring your services with us? Email us at [email protected]